wgsl-analyzer

At its core, wgsl-analyzer is a library for semantic analysis of WGSL and WESL code as it changes over time.

This manual focuses on a specific usage of the library - running it as part of a server that implements the Language Server Protocol (LSP).

The LSP allows various code editors, such as VS Code, Emacs, or Vim to implement semantic features such as completion or goto definition by talking to an external language server process.

To improve this document, send a pull request: https://github.com/wgsl-analyzer/wgsl-analyzer.

The manual is written in markdown and includes some extra files which are generated from the source code.

Run cargo test and cargo xtask codegen to create these.

If you have a question about using wgsl-analyzer, please read the documentation.

If your question is not addressed, then ask it in the "discord".

Ideally, the documentation should address all usage questions.

Installation

To use wgsl-analyzer, you need a wgsl-analyzer binary and a text editor that supports LSP.

If you are using VS Code, the extension bundles a copy of the wgsl-analyzer binary.

For other editors, you will need to install the binary and configure your editor.

Crates

There is a package named wa_ap_wgsl-analyzer available on crates.io for people who want to use wgsl-analyzer programmatically.

For more details, see the publish workflow.

VS Code

This is the best supported editor at the moment.

The wgsl-analyzer plugin for VS Code is maintained in-tree.

You can install the latest release of the plugin from the marketplace.

The server binary is stored in the extension install directory, which starts with wgsl-analyzer.wgsl-analyzer- and is located in:

- Linux: ~/.vscode/extensions

- Linux (Remote, such as WSL): ~/.vscode-server/extensions

- macOS: ~/.vscode/extensions

- Windows: %USERPROFILE%\.vscode\extensions

As an exception, on NixOS, the extension makes a copy of the server and stores it in ~/.config/Code/User/globalStorage/wgsl-analyzer.wgsl-analyzer.
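
As a rough illustration of the lookup described above, the following Python sketch lists directories whose names start with the wgsl-analyzer.wgsl-analyzer- prefix. The function name find_extension_dirs is hypothetical, and the sketch only inspects the directory you pass in; it does not know about the NixOS exception.

```python
from pathlib import Path

# Prefix of the extension install directory, as stated in the text above.
EXTENSION_PREFIX = "wgsl-analyzer.wgsl-analyzer-"


def find_extension_dirs(extensions_root: Path) -> list[Path]:
    """Return matching extension directories under `extensions_root`, sorted by name."""
    if not extensions_root.is_dir():
        return []
    return sorted(
        entry
        for entry in extensions_root.iterdir()
        if entry.is_dir() and entry.name.startswith(EXTENSION_PREFIX)
    )
```

For a default Linux or macOS setup you would call it as find_extension_dirs(Path.home() / ".vscode" / "extensions"); for the other platforms, pass the corresponding directory from the list above.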

Note that we only support the two most recent versions of VS Code.

Updates

The extension will be updated automatically as new versions become available. It will ask your permission to download the matching language server version binary if needed.

Nightly

We ship nightly releases for VS Code. To help us out by testing the newest code, you can enable pre-release versions in the Code extension page.

Manual installation

Alternatively, download a VSIX corresponding to your platform from the releases page.

Install the extension with the Extensions: Install from VSIX command within VS Code, or from the command line via:

code --install-extension /path/to/wgsl-analyzer.vsix

If you are running an unsupported platform, you can install wgsl-analyzer-no-server.vsix and compile or obtain a server binary.

Copy the server anywhere, then add the path to your settings.json.

For example:

{ "wgsl-analyzer.server.path": "~/.local/bin/wgsl-analyzer-linux" }

Building From Source

Both the server and the Code plugin can be installed from source:

git clone https://github.com/wgsl-analyzer/wgsl-analyzer.git && cd wgsl-analyzer

cargo xtask install

You will need Cargo, Node.js (matching a supported version of VS Code) and npm for this.

Note that installing via xtask install does not work for VS Code Remote.

Instead, you will need to install the .vsix manually.

If you are not using Code, you can compile and install only the LSP server:

cargo xtask install --server

Make sure that .cargo/bin is in $PATH and precedes paths where wgsl-analyzer may also be installed.

VS Code or VSCodium in Flatpak

Setting up wgsl-analyzer with a Flatpak version of Code is not trivial because of the Flatpak sandbox. This prevents access to files you might want to import.

wgsl-analyzer Binary

Text editors require the wgsl-analyzer binary to be in $PATH.

You can download pre-built binaries from the releases page.

You will need to uncompress and rename the binary for your platform.

For example, on macOS:

- extract wgsl-analyzer-aarch64-apple-darwin.gz to wgsl-analyzer

- make it executable

- move it into a directory in your $PATH

On Linux, to install the wgsl-analyzer binary into ~/.local/bin, these commands should work:

mkdir -p ~/.local/bin

curl -L https://github.com/wgsl-analyzer/wgsl-analyzer/releases/latest/download/wgsl-analyzer-x86_64-unknown-linux-gnu.gz | gunzip -c - > ~/.local/bin/wgsl-analyzer

chmod +x ~/.local/bin/wgsl-analyzer

Make sure that ~/.local/bin is listed in the $PATH variable, and use the appropriate URL if you are not on an x86-64 system.

You do not have to use ~/.local/bin; any other directory on your $PATH, such as ~/.cargo/bin or /usr/local/bin, will work just as well.

Alternatively, you can install it from source using the command below. You will need the latest stable version of the Rust toolchain.

git clone https://github.com/wgsl-analyzer/wgsl-analyzer.git && cd wgsl-analyzer

cargo xtask install --server

If your editor cannot find the binary even though the binary is on your $PATH, the likely explanation is that it does not see the same $PATH as the shell.

On Unix, running the editor from a shell or changing the .desktop file to set the environment should help.
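
To compare what the shell and the editor see, it can help to list every wgsl-analyzer executable on a given $PATH value, in lookup order. The sketch below is a minimal, Unix-oriented illustration; find_on_path is a hypothetical helper and it ignores Windows details such as the .exe suffix.

```python
import os
from pathlib import Path


def find_on_path(name: str, path_value: str) -> list[Path]:
    """Return every executable called `name` in `path_value`, in lookup order."""
    matches = []
    for directory in path_value.split(os.pathsep):
        if not directory:
            # Skip empty entries such as the one in "a::b".
            continue
        candidate = Path(directory) / name
        if candidate.is_file() and os.access(candidate, os.X_OK):
            matches.append(candidate)
    return matches
```

Calling find_on_path("wgsl-analyzer", os.environ["PATH"]) from a terminal and again from a process started by the editor shows whether the two environments differ. The first entry in the result is the binary that would normally be launched.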

Arch Linux

The wgsl-analyzer binary can be installed from the repos or AUR (Arch User Repository):

- wgsl-analyzer (built from latest tagged source)

- wgsl-analyzer-git (latest Git version)

Install it with pacman, for example:

pacman -S wgsl-analyzer

Gentoo Linux

macOS

The wgsl-analyzer binary can be installed via Homebrew.

brew install wgsl-analyzer

Windows

The wgsl-analyzer binary can be installed via WinGet or Chocolatey.

winget install wgsl-analyzer

choco install wgsl-analyzer

Other Editors

wgsl-analyzer works with any editor that supports the Language Server Protocol.

This page assumes that you have already installed the wgsl-analyzer binary.

Emacs (using lsp-mode)

- Assumes you are using wgsl-mode: https://github.com/acowley/wgsl-mode

- Install the language server

cargo install --git https://github.com/wgsl-analyzer/wgsl-analyzer wgsl-analyzer

- Add the following to your init.el

(with-eval-after-load 'lsp-mode
  (add-to-list 'lsp-language-id-configuration '(wgsl-mode . "wgsl"))
  (lsp-register-client
   (make-lsp-client
    :new-connection (lsp-stdio-connection "wgsl-analyzer")
    :activation-fn (lsp-activate-on "wgsl")
    :server-id 'wgsl-analyzer)))

Eglot

Eglot is the more minimalistic and lightweight LSP client for Emacs. It integrates well with existing Emacs functionality and has been built into Emacs since release 29.

After installing Eglot, e.g. via M-x package-install (not needed from Emacs 29), you can enable it via the M-x eglot command or load it automatically in wgsl-mode via

(add-hook 'wgsl-mode-hook 'eglot-ensure)

For more detailed instructions and options see the Eglot manual (also available from Emacs via M-x info) and the Eglot readme.

Eglot does not support the wgsl-analyzer extensions to the language-server protocol and does not aim to do so in the future.

The eglot-x package adds experimental support for those LSP extensions.

LSP Mode

LSP-mode is the original LSP client for Emacs. Compared to Eglot, it has a larger codebase and supports more features, such as LSP protocol extensions. With extension packages like LSP UI, it offers a lot of visual eye candy. It also integrates well with DAP mode for Debug Adapter Protocol support.

You can install LSP-mode via M-x package-install and then run it via the M-x lsp command or load it automatically in WGSL/WESL buffers with

(add-hook 'wgsl-mode-hook 'lsp-deferred)

For more information on how to set up LSP mode and its extension package see the instructions in the LSP mode manual.

Also see the wgsl-analyzer section for wgsl-analyzer specific options and commands, which you can optionally bind to keys.

Vim/Neovim

There are several LSP client implementations for Vim or Neovim:

Using coc-wgsl-analyzer

- Install coc.nvim by following the instructions at coc.nvim (Node.js required)

- Run :CocInstall coc-wgsl-analyzer to install coc-wgsl-analyzer. This extension implements most of the features supported in the VS Code extension:

  - automatically install and upgrade stable/nightly releases

  - same configurations as VS Code extension, wgsl-analyzer.server.path, wgsl-analyzer.cargo.features etc.

  - same commands too, wgsl-analyzer.analyzerStatus, wgsl-analyzer.ssr etc.

  - inlay hints for variables and method chaining, Neovim only

[!NOTE] coc-wgsl-analyzer is capable of installing or updating the wgsl-analyzer binary on its own.

[!NOTE] For code actions, use coc-codeaction-cursor and coc-codeaction-selected; coc-codeaction and coc-codeaction-line are unlikely to be useful.

Using LanguageClient-neovim

- Install LanguageClient-neovim by following the instructions

  - The GitHub project wiki has extra tips on configuration

- Configure by adding this to your Vim/Neovim config file (replacing the existing WGSL or WESL-specific line if it exists):

let g:LanguageClient_serverCommands = {
\ 'wgsl': ['wgsl-analyzer'],
\ 'wesl': ['wgsl-analyzer'],
\ }

Using lsp

- Install the wgsl-analyzer language server

- Configure the .wgsl and .wesl filetype

Create /ftdetect/wgsl.lua and /ftdetect/wesl.lua in your Neovim configuration.

vim.api.nvim_create_autocmd({ "BufRead", "BufNewFile" }, { pattern = "*.wgsl", command = "setfiletype wgsl" })

vim.api.nvim_create_autocmd({ "BufRead", "BufNewFile" }, { pattern = "*.wesl", command = "setfiletype wesl" })

- Configure the nvim lsp

local lspconfig = require('lspconfig')
lspconfig.wgsl_analyzer.setup({})

Using coc.nvim

- Requires CoC to be installed: https://github.com/neoclide/coc.nvim

- Requires cargo to be installed to build binaries:

- Install the language server

cargo install --git https://github.com/wgsl-analyzer/wgsl-analyzer.git wgsl-analyzer

(If you are not familiar with using and setting up cargo, you might run into problems finding your binary. Ensure that $HOME/.cargo/bin is in your $PATH. More info about $PATH: https://linuxconfig.org/linux-path-environment-variable)

- Open Neovim / Vim and type :CocConfig to configure coc.nvim.

- Under .languageserver: { ... } create a new field "wgsl-analyzer-language-server". The field should look like this:

// {
//   "languageserver": {
       "wgsl-analyzer-language-server": {
         "command": "wgsl-analyzer", // alternatively you can specify the absolute path to your binary.
         "filetypes": ["wgsl", "wesl"],
       },
//     ...
// }

- In order for your editor to recognize WGSL files as such, you need to put this into your vim.rc

" Recognize wgsl
au BufNewFile,BufRead *.wgsl set filetype=wgsl

Using nvim-cmp/cmp_nvim_lsp

Requires nvim-cmp and cmp_nvim_lsp.

- Your existing setup should look similar to this:

local capabilities = vim.lsp.protocol.make_client_capabilities()
capabilities = vim.tbl_deep_extend("force", capabilities, require("cmp_nvim_lsp").default_capabilities())
local lspconfig = require("lspconfig")

- Pass capabilities to the wgsl-analyzer setup:

lspconfig.wgsl_analyzer.setup({
  filetypes = { "wgsl", "wesl" },
  capabilities = capabilities,
})

YouCompleteMe

Install YouCompleteMe by following the instructions.

wgsl-analyzer is the default in YCM, so it should work out of the box.

ALE

To use the LSP server in ale:

let g:ale_linters = {'wgsl': ['analyzer'], 'wesl': ['analyzer']}

nvim-lsp

Neovim 0.5 has built-in language server support.

For a quick start configuration of wgsl-analyzer, use neovim/nvim-lspconfig.

Once neovim/nvim-lspconfig is installed, use lua require'lspconfig'.wgsl_analyzer.setup({}) in your init.vim.

You can also pass LSP settings to the server:

lua << EOF

local lspconfig = require'lspconfig'

local on_attach = function(client)

require'completion'.on_attach(client)

end

lspconfig.wgsl_analyzer.setup({

on_attach = on_attach,

settings = {

["wgsl-analyzer"] = {

}

}

})

EOF

If you are running Neovim 0.10 or later, you can enable inlay hints via on_attach:

lspconfig.wgsl_analyzer.setup({

on_attach = function(client, bufnr)

vim.lsp.inlay_hint.enable(true, { bufnr = bufnr })

end

})

Note that the hints are only visible after wgsl-analyzer has finished loading, and you have to edit the file to trigger a re-render.

vim-lsp

vim-lsp is installed by following the plugin instructions.

It can be as simple as adding this line to your .vimrc:

Plug 'prabirshrestha/vim-lsp'

Next you need to register the wgsl-analyzer binary.

If it is available in $PATH, you may want to add this to your .vimrc:

if executable('wgsl-analyzer')

au User lsp_setup call lsp#register_server({

\ 'name': 'wgsl-analyzer Language Server',

\ 'cmd': {server_info->['wgsl-analyzer']},

\ 'whitelist': ['wgsl', 'wesl'],

\ })

endif

There is no dedicated UI for the server configuration, so you would need to send any options as a value of the initialization_options field, as described in the Configuration section.

Here is an example of how to enable the proc-macro support:

if executable('wgsl-analyzer')

au User lsp_setup call lsp#register_server({

\ 'name': 'wgsl-analyzer Language Server',

\ 'cmd': {server_info->['wgsl-analyzer']},

\ 'whitelist': ['wgsl', 'wesl'],

\ 'initialization_options': {

\ 'cargo': {

\ 'buildScripts': {

\ 'enable': v:true,

\ },

\ },

\ 'procMacro': {

\ 'enable': v:true,

\ },

\ },

\ })

endif

Sublime Text

Sublime Text 4

Follow the instructions in LSP-rust-analyzer, but substitute rust with wgsl where applicable.

Install LSP-file-watcher-chokidar to enable file watching (workspace/didChangeWatchedFiles).

Sublime Text 3

- Install the LSP package.

- From the command palette, run LSP: Enable Language Server Globally and select wgsl-analyzer.

If it worked, you should see "wgsl-analyzer, Line X, Column Y" on the left side of the status bar, and after waiting a bit, functionalities like tooltips on hovering over variables should become available.

If you get an error saying No such file or directory: 'wgsl-analyzer', see the wgsl-analyzer binary installation section.

GNOME Builder

No support.

Eclipse IDE

No support.

Kate Text Editor

Support for the language server protocol is built into Kate through the LSP plugin, which is included by default.

To change wgsl-analyzer config options, start from the following example and put it into Kate's "User Server Settings" tab (located under the LSP Client settings):

{

"servers": {

"wgsl": {

"command": ["wgsl-analyzer"],

"url": "https://github.com/wgsl-analyzer/wgsl-analyzer",

"highlightingModeRegex": "^WGSL$"

},

"wesl": {

"command": ["wgsl-analyzer"],

"url": "https://github.com/wgsl-analyzer/wgsl-analyzer",

"highlightingModeRegex": "^WESL$"

}

}

}

Then click on apply, and restart the LSP server for your WGSL code or WESL project.

juCi++

juCi++ has built-in support for the language server protocol.

Kakoune

Kakoune supports LSP with the help of kak-lsp.

Follow the instructions to install kak-lsp.

To configure kak-lsp, refer to the configuration section.

It is about copying the configuration file to the right place.

The latest versions should use wgsl-analyzer by default.

Finally, you need to configure Kakoune to talk to kak-lsp (see Usage section).

A basic configuration will only get you LSP but you can also activate inlay diagnostics and auto-formatting on save.

The following might help you understand all of this:

eval %sh{kak-lsp --kakoune -s $kak_session} # Not needed if you load it with plug.kak.

hook global WinSetOption filetype=(wgsl|wesl) %{

# Enable LSP

lsp-enable-window

# Auto-formatting on save

hook window BufWritePre .* lsp-formatting-sync

# Configure inlay hints (only on save)

hook window -group wgsl-inlay-hints BufWritePost .* wgsl-analyzer-inlay-hints

hook -once -always window WinSetOption filetype=.* %{

remove-hooks window wgsl-inlay-hints

}

}

Helix

Helix supports LSP by default.

However, it will not install wgsl-analyzer automatically.

You can follow instructions for installing the wgsl-analyzer binary.

Visual Studio 2022

No support.

Lapce

No support.

Zed

No support.

IntelliJ IDEs

This includes:

- IntelliJ IDEA Ultimate

- WebStorm

- PhpStorm

- PyCharm Professional

- DataSpell

- RubyMine

- CLion

- Aqua

- DataGrip

- GoLand

- Rider

- RustRover

No support.

See #207

Troubleshooting

Start with looking at the wgsl-analyzer version.

Try the wgsl-analyzer: Show WA Version command in the Command Palette.

(Open the Command Palette with Ctrl+Shift+P.)

You can also run wgsl-analyzer --version in the command line.

If the date is more than a week ago, it is better to update your installation of wgsl-analyzer to the newest version.
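
The age check can be automated. The sketch below assumes that the version output contains a YYYY-MM-DD build date, as in the sample output shown later in this section (wgsl-analyzer dd12184e4 2021-05-08 dev); the helper names build_date and is_stale are hypothetical, and the exact output format may differ between builds.

```python
import re
from datetime import date
from typing import Optional


def build_date(version_output: str) -> Optional[date]:
    """Extract the first YYYY-MM-DD date from `wgsl-analyzer --version` output."""
    match = re.search(r"\b(\d{4})-(\d{2})-(\d{2})\b", version_output)
    if match is None:
        return None
    year, month, day = (int(part) for part in match.groups())
    return date(year, month, day)


def is_stale(version_output: str, today: date, max_age_days: int = 7) -> bool:
    """Report whether the build is older than `max_age_days`.

    Output without a recognizable date is treated as stale.
    """
    built = build_date(version_output)
    if built is None:
        return True
    return (today - built).days > max_age_days
```

In practice you would pass the text printed by wgsl-analyzer --version together with date.today().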

The next thing to check would be panic messages in wgsl-analyzer's log.

Log messages are printed to stderr. In VS Code, you can see them in the Output > wgsl-analyzer Language Server tab of the panel.

To see more logs, set the WA_LOG=info environment variable, either manually or through the wgsl-analyzer.server.extraEnv setting.

Note that both of these approaches require the server to be restarted.

To fully capture LSP messages between the editor and the server, run the wgsl-analyzer: Toggle LSP Logs command and check Output > wgsl-analyzer Language Server Trace.

The root cause for many "nothing works" problems is that wgsl-analyzer fails to understand the project structure.

To debug that, first note the wgsl-analyzer section in the status bar.

If it shows an error icon and is red, that is the problem (hovering over it shows a somewhat helpful error message).

wgsl-analyzer: Status prints dependency information for the current file.

Finally, WA_LOG=project_model=debug enables verbose logs during project loading.

If wgsl-analyzer outright crashes, try running wgsl-analyzer analysis-stats /path/to/project/directory/ on the command line.

This command type-checks the whole project in batch mode, bypassing the LSP machinery.

When filing issues, it is useful (but not necessary) to try to minimize examples.

An ideal bug reproduction looks like this:

$ git clone https://github.com/username/repo.git && cd repo && git switch --detach commit-hash

$ wgsl-analyzer --version

wgsl-analyzer dd12184e4 2021-05-08 dev

$ wgsl-analyzer analysis-stats .

💀 💀 💀

It is especially useful when the repo does not use external crates or the standard library.

If you want to go as far as to modify the source code to debug the problem, be sure to take a look at the dev docs!

Configuration

Source: config.rs

The Installation section contains details on configuration for some of the editors.

In general, wgsl-analyzer is configured via LSP messages, which means that it is up to the editor to decide on the exact format and location of configuration files.

Some editors, such as VS Code or COC plugin in Vim, provide wgsl-analyzer-specific configuration UIs.

Other editors may require you to know a bit more about the interaction with wgsl-analyzer.

For the latter category, it might help to know that the initial configuration is specified as the value of the initializationOptions field of the InitializeParams structure sent with the LSP initialize request.

The spec says that the field type is any?, but wgsl-analyzer is looking for a JSON object that is constructed using settings from the list below.

The name of the setting, ignoring the wgsl-analyzer. prefix, is used as a path, and the value of the setting becomes the JSON property value.
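
The mapping from setting names to the JSON object can be sketched in a few lines of Python. This is only an illustration of the rule stated above, not code taken from wgsl-analyzer; the function name to_initialization_options is hypothetical, and the setting names in the usage note are the diagnostics settings mentioned elsewhere in this manual.

```python
from typing import Any

# Prefix that is dropped before the setting name is used as a path.
PREFIX = "wgsl-analyzer."


def to_initialization_options(settings: dict[str, Any]) -> dict[str, Any]:
    """Nest flat, dot-separated setting names into a JSON-style object."""
    options: dict[str, Any] = {}
    for name, value in settings.items():
        path = name.removeprefix(PREFIX).split(".")
        node = options
        # Walk (and create) the intermediate objects for all but the last key.
        for key in path[:-1]:
            node = node.setdefault(key, {})
        node[path[-1]] = value
    return options
```

For example, the settings wgsl-analyzer.diagnostics.enable = true and wgsl-analyzer.diagnostics.experimental.enable = false become {"diagnostics": {"enable": true, "experimental": {"enable": false}}}, which is the shape an editor would send as initializationOptions.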

Please consult your editor's documentation to learn more about how to configure LSP servers.

To verify which configuration is actually used by wgsl-analyzer, set the WA_LOG environment variable to wgsl_analyzer=info and look for config-related messages.

Logs should show both the JSON that wgsl-analyzer sees as well as the updated config.

This is the list of config options wgsl-analyzer supports:

Security

At the moment, wgsl-analyzer assumes that all code is trusted.

Here is a non-exhaustive list of ways to make wgsl-analyzer execute arbitrary code:

- The VS Code plugin reads configuration from the project directory, and that can be used to override paths to various executables, like wgslfmt or wgsl-analyzer itself.

- wgsl-analyzer's syntax trees library uses a lot of unsafe and has not been properly audited for memory safety.

Privacy

The LSP server and the Code extension may access the network if the user configures it to import shaders from the internet.

Any other editor plugins are not under the control of the wgsl-analyzer developers.

For any privacy concerns, you should check with their respective developers.

For wgsl-analyzer developers, cargo xtask release uses the GitHub API to put together the release notes.

Features

Assists

Assists, or code actions, are small local refactorings available in a particular context.

They are usually triggered by a shortcut or by clicking a light bulb icon in the editor.

Cursor position or selection is signified by the ┃ character.

Diagnostics

Most errors and warnings provided by wgsl-analyzer come from wgsl-analyzer's own analysis.

Some of these diagnostics do not respect // wgsl-analyzer diagnostic control comments yet.

They can be turned off using the wgsl-analyzer.diagnostics.enable, wgsl-analyzer.diagnostics.experimental.enable, or wgsl-analyzer.diagnostics.disabled settings.

Editor Features

VS Code

Color configurations

It is possible to change the foreground/background color and font family/size of inlay hints.

Just add this to your settings.json:

{

"editor.inlayHints.fontFamily": "Courier New",

"editor.inlayHints.fontSize": 11,

"workbench.colorCustomizations": {

// Name of the theme you are currently using

"[Default Dark+]": {

"editorInlayHint.foreground": "#868686f0",

"editorInlayHint.background": "#3d3d3d48",

// Overrides for specific kinds of inlay hints

"editorInlayHint.typeForeground": "#fdb6fdf0",

"editorInlayHint.parameterForeground": "#fdb6fdf0",

}

}

}

Semantic style customizations

You can customize the look of different semantic elements in the source code.

For example, mutable bindings are underlined by default, and you can override this behavior by adding the following section to your settings.json:

{

"editor.semanticTokenColorCustomizations": {

"rules": {

"*.mutable": {

"fontStyle": "" // underline is the default

}

}

}

}

Most themes do not support styling unsafe operations differently yet.

You can fix this by adding overrides for the rules operator.unsafe, function.unsafe, and method.unsafe:

{

"editor.semanticTokenColorCustomizations": {

"rules": {

"operator.unsafe": "#ff6600",

"function.unsafe": "#ff6600",

"method.unsafe": "#ff6600"

}

}

}

In addition to the top-level rules, you can specify overrides for specific themes. For example, if you wanted to use a darker text color on a specific light theme, you might write:

{

"editor.semanticTokenColorCustomizations": {

"rules": {

"operator.unsafe": "#ff6600"

},

"[Ayu Light]": {

"rules": {

"operator.unsafe": "#572300"

}

}

}

}

Make sure you include the brackets around the theme name.

For example, use "[Ayu Light]" to customize the theme Ayu Light.

Special when clause context for keybindings

You may use the inWeslProject context to configure keybindings for WGSL/WESL projects only.

For example:

{

"key": "ctrl+alt+d",

"command": "wgsl-analyzer.openDocs",

"when": "inWeslProject"

}

More about when clause contexts.

Setting runnable environment variables

You can use the wgsl-analyzer.runnables.extraEnv setting to define runnable environment-specific substitution variables.

The simplest way is to set the variables for all runnables at once:

"wgsl-analyzer.runnables.extraEnv": {

"RUN_SLOW_TESTS": "1"

}

Or it is possible to specify vars more granularly:

"wgsl-analyzer.runnables.extraEnv": [

{

// "mask": null, // null mask means that this rule will be applied for all runnables

"env": {

"APP_ID": "1",

"APP_DATA": "asdf"

}

},

{

"mask": "test_name",

"env": {

"APP_ID": "2" // overwrites only APP_ID

}

}

]

You can use any valid regular expression as a mask.

Also, note that a full runnable name is something like run bin_or_example_name, test some::mod::test_name, or test-mod some::mod.

It is possible to distinguish binaries, single tests, and test modules with these masks: "^run", "^test " (the trailing space matters!), and "^test-mod" respectively.
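
To make the mask behaviour concrete, here is a small Python model of how such rules could be resolved for one runnable. It is a sketch under assumptions: rules are applied in order, later rules overwrite earlier values, and a mask matches anywhere in the runnable name. The function resolve_env is hypothetical, and Python's regular expression syntax is not identical to the one VS Code uses.

```python
import re
from typing import Optional


def resolve_env(rules: list[dict], runnable_name: str) -> dict[str, str]:
    """Merge the `env` of every rule whose `mask` matches `runnable_name`."""
    merged: dict[str, str] = {}
    for rule in rules:
        mask: Optional[str] = rule.get("mask")
        # A missing or null mask applies the rule to every runnable.
        if mask is None or re.search(mask, runnable_name):
            merged.update(rule.get("env", {}))
    return merged
```

With the two rules from the granular example above, the runnable test some::mod::test_name would get APP_ID=2 and APP_DATA=asdf, while run bin_or_example_name would keep APP_ID=1. The trailing space in "^test " is what keeps it from matching test-mod some::mod.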

If needed, you can set different values for different platforms:

"wgsl-analyzer.runnables.extraEnv": [

{

"platform": "win32", // windows only

"env": {

"APP_DATA": "windows specific data"

}

},

{

"platform": ["linux"],

"env": {

"APP_DATA": "linux data"

}

},

{ // for all platforms

"env": {

"APP_COMMON_DATA": "xxx"

}

}

]

Compiler feedback from external commands

You can configure VS Code to run a command in the background and use the $wgsl-analyzer-watch problem matcher to generate inline error markers from its output.

To do this, you need to create a new VS Code Task and set "wgsl-analyzer.checkOnSave": false in preferences.

Example .vscode/tasks.json:

{

"label": "Watch",

"group": "build",

"type": "shell",

"command": "example-tool watch",

"problemMatcher": "$wgsl-analyzer-watch",

"isBackground": true

}

Live Share

VS Code Live Share has partial support for wgsl-analyzer.

Live Share requires the official Microsoft build of VS Code; OSS builds will not work correctly.

The host's wgsl-analyzer instance will be shared with all guests joining the session.

The guests do not have to have the wgsl-analyzer extension installed for this to work.

If you are joining a Live Share session and do have wgsl-analyzer installed locally, then commands from the command palette will not work correctly.

This is because they will attempt to communicate with the local server, not the server of the session host.

Contributing Quick Start

wgsl-analyzer is an ordinary Rust project, which is organized as a Cargo workspace, builds on stable, and does not depend on C libraries.

Simply run the following to get started:

cargo test

To learn more about how wgsl-analyzer works, see Architecture.

It also explains the high-level layout of the source code.

Do skim through that document.

We also publish rustdoc docs to pages: https://wgsl-analyzer.github.io/wgsl-analyzer/ide. Note that the internal documentation is very incomplete.

Various organizational and process issues are discussed in this document.

Getting in Touch

Discussion happens in this Discord server:

Issue Labels

https://github.com/wgsl-analyzer/wgsl-analyzer/labels

- [A-Analyzer]: Affects the wgsl-analyzer crate

- [A-Base-DB]: Affects the base_db crate

- [A-Build-System]: CI stuff

- [A-Completion]: Affects the ide_completion crate

- [A-Cross-Cutting]: Affects many crates

- [A-Formatter]: Affects the wgsl-formatter crate

- [A-HIR]: Affects the hir or hir_def crate

- [A-IDE]: Affects the ide crate

- [A-Meta]: Affects non-code files such as documentation

- [A-wgslfmt]: Affects the wgslfmt crate

- [C-Bug]: Something isn't working

- [C-Dependencies]: Bump and migrate a dependency

- [C-Documentation]: Improvements or additions to documentation

- [C-Enhancement]: Improvement over an existing feature

- [C-Feature]: New feature or request

- [D-Complex]: Large implications, lots of changes, much thought

- [D-Modest]: "Normal" difficulty of solving

- [D-Straightforward]: Relatively easy to solve

- [D-Trivial]: Good for newcomers

- [S-Adopt-Me]: Extra attention is needed

- [S-Blocked]: Blocked on something else happening

- [S-Duplicate]: This issue or pull request already exists

- [S-Needs-Design]: The way this should be done is not yet clear

- [S-Needs-Investigation]: The cause of the issue is TBD

- [S-Needs-Triage]: Hasn't been triaged yet

- [S-Ready-to-Implement]: This issue is actionable and a solution can be proposed

- [S-Ready-to-Review]: This change is in a good state and needs someone (anyone!) to review it

- [S-Waiting-on-Author]: A change or a response from the author is needed

- [S-Won't-Fix]: This will not be worked on

Code Style & Review Process

See the Style Guide.

Cookbook

CI

We use GitHub Actions for CI.

Most of the things, including formatting, are checked by cargo test.

If cargo test passes locally, that is a good sign that CI will be green as well.

The only exception is that some long-running tests are skipped locally by default.

Use env RUN_SLOW_TESTS=1 cargo test to run the full suite.

We use bors to enforce the not rocket science rule.

Launching wgsl-analyzer

Debugging the language server can be tricky. LSP is rather chatty, so driving it from the command line is not really feasible, and driving it via VS Code requires interacting with two processes.

For this reason, the best way to see how wgsl-analyzer works is to find a relevant test and execute it.

Launching a VS Code instance with a locally built language server is also possible. There is a "Run Extension (Debug Build)" launch configuration for this in VS Code.

In general, I use one of the following workflows for fixing bugs and implementing features:

If the problem concerns only internal parts of wgsl-analyzer (i.e. I do not need to touch the wgsl-analyzer crate or TypeScript code), there is a unit-test for it.

So, I use wgsl-analyzer: Run action in VS Code to run this single test, and then just do printf-driven development/debugging.

As a sanity check after I am done, I use cargo xtask install --server and Reload Window action in VS Code to verify that the thing works as I expect.

If the problem concerns only the VS Code extension, I use Run Installed Extension launch configuration from launch.json.

Notably, this uses the usual wgsl-analyzer binary from PATH.

For this, it is important to have the following in your settings.json file:

{

"wgsl-analyzer.server.path": "wgsl-analyzer"

}

After I am done with the fix, I use cargo xtask install --client to try the new extension for real.

If I need to fix something in the wgsl-analyzer crate, I feel sad because it is on the boundary between the two processes, and working there is slow.

I usually just cargo xtask install --server and poke changes from my live environment.

Note that this uses --release, which is usually faster overall, because loading stdlib into debug version of wgsl-analyzer takes a lot of time.

Note that you should only use the eprint! family of macros for debugging: stdout is used for LSP communication, and print! would break it.

If I need to fix something simultaneously in the server and in the client, I feel even more sad. I do not have a specific workflow for this case.

TypeScript Tests

If you change files under editors/code and would like to run the tests and linter, install npm and run:

cd editors/code

npm ci

npm run ci

Run npm run to see all available scripts.

How to

- ... add an assist? #7535

- ... add a new protocol extension? #4569

- ... add a new configuration option? #7451

- ... add a new completion? #6964

- ... allow new syntax in the parser? #7338

Logging

Logging is done by both wgsl-analyzer and VS Code, so it might be tricky to figure out where logs go.

Inside wgsl-analyzer, we use the tracing crate for logging, and tracing-subscriber as the logging frontend.

By default, logs go to stderr, but stderr itself is processed by VS Code.

The --log-file <PATH> CLI argument allows logging to a file.

Setting the WA_LOG_FILE=<PATH> environment variable also logs to a file and overrides --log-file.

To see stderr in the running VS Code instance, go to the "Output" tab of the panel and select wgsl-analyzer.

This shows eprintln! as well.

Note that stdout is used for the actual protocol, so println! will break things.
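
The reason stray output breaks things is the LSP base protocol: every message on stdout is a Content-Length header, a blank line, and a JSON body. The Python sketch below frames and parses one message to show how an unexpected line corrupts the stream. It is a simplified illustration with hypothetical helper names, not the server's implementation.

```python
import json


def frame_message(payload: dict) -> bytes:
    """Encode a JSON-RPC payload with the LSP base-protocol header."""
    body = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    header = f"Content-Length: {len(body)}\r\n\r\n".encode("ascii")
    return header + body


def parse_message(data: bytes) -> dict:
    """Decode one framed message; raises ValueError if the framing is broken."""
    header, separator, rest = data.partition(b"\r\n\r\n")
    if not separator:
        raise ValueError("missing header terminator")
    length = None
    for line in header.split(b"\r\n"):
        key, _, value = line.partition(b":")
        if key.strip().lower() == b"content-length":
            length = int(value.strip())
    if length is None:
        raise ValueError("missing Content-Length header")
    return json.loads(rest[:length].decode("utf-8"))
```

A correctly framed message round-trips through parse_message, while the same bytes prefixed with a line of debug output fail with ValueError in this sketch, because the header no longer starts where the reader expects it. A real client may fail differently, but the effect is the same.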

To log all communication between the server and the client, there are two choices:

- You can log on the server side, by running something like

env WA_LOG=lsp_server=debug code .

- You can log on the client side, by the wgsl-analyzer: Toggle LSP Logs command or enabling "wgsl-analyzer.trace.server": "verbose" workspace setting. These logs are shown in a separate tab in the output and could be used with LSP inspector. Kudos to @DJMcNab for setting this awesome infra up!

There are also several VS Code commands which might be of interest:

- wgsl-analyzer: Status shows some memory-usage statistics.

- wgsl-analyzer: View Hir shows the HIR expressions within the function containing the cursor.

- If wgsl-analyzer.showSyntaxTree is enabled in settings, WGSL/WESL Syntax Tree: Focus on WGSL/WESL Syntax Tree View shows the syntax tree of the current file.

  You can click on nodes in the WGSL/WESL editor to go to the corresponding syntax node.

  You can click on Reveal Syntax Element next to a syntax node to go to the corresponding code and highlight the proper text range.

  If you trigger Go to Definition in the inspected source file, the syntax tree view should scroll to and select the appropriate syntax node token.

  You can click on Copy next to a syntax node to copy a text representation of the node.

Profiling

We have a built-in hierarchical profiler. You can enable it with the WA_PROFILE environment variable:

WA_PROFILE=* // dump everything

WA_PROFILE=foo|bar|baz // enable only selected entries

WA_PROFILE=*@3>10 // dump everything, up to depth 3, if it takes more than 10 ms

Some wgsl-analyzer contributors have export WA_PROFILE='*>10' in their shell profile.
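The filter syntax above (names separated by |, an optional @depth, an optional >milliseconds) can be read as three independent parts. The sketch below parses such a string; ProfileFilter and parse_profile_filter are hypothetical names for this illustration and are not the profiler's actual implementation.

```rust
use std::time::Duration;

/// Hypothetical parsed form of a `WA_PROFILE` value.
#[derive(Debug, PartialEq)]
struct ProfileFilter {
    /// Entry names to report; empty means "everything" (`*`).
    allowed: Vec<String>,
    /// Maximum nesting depth to print, if limited with `@N`.
    max_depth: Option<usize>,
    /// Only report entries that take longer than this (`>MS`).
    longer_than: Duration,
}

/// Returns `None` if the depth or the threshold is not a number.
fn parse_profile_filter(spec: &str) -> Option<ProfileFilter> {
    // Split off the optional `>MS` suffix first, then the optional `@DEPTH`.
    let (rest, longer_than) = match spec.rsplit_once('>') {
        Some((rest, ms)) => (rest, Duration::from_millis(ms.parse::<u64>().ok()?)),
        None => (spec, Duration::ZERO),
    };
    let (names, max_depth) = match rest.rsplit_once('@') {
        Some((names, depth)) => (names, Some(depth.parse::<usize>().ok()?)),
        None => (rest, None),
    };
    let allowed = if names == "*" {
        Vec::new()
    } else {
        names.split('|').map(str::to_owned).collect()
    };
    Some(ProfileFilter { allowed, max_depth, longer_than })
}
```

Under these assumptions, "*@3>10" yields no name restriction, a depth limit of 3, and a 10 ms threshold.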

For machine-readable JSON output, we have the WA_PROFILE_JSON env variable.

We support filtering only by span name:

WA_PROFILE=* // dump everything

WA_PROFILE_JSON="vfs_load|parallel_prime_caches|discover_command" // dump selected spans

We also have a "counting" profiler which counts number of instances of popular structs.

It is enabled by WA_COUNT=1.

Release Process

Release process is handled by release, dist, publish-release-notes and promote xtasks, release being the main one.

release assumes that you have checkouts of wgsl-analyzer and wgsl-analyzer.github.io in the same directory:

./wgsl-analyzer

./wgsl-analyzer.github.io

The remote for wgsl-analyzer must be called upstream (I use origin to point to my fork).

release calls the GitHub API to scrape pull request comments and categorize them in the changelog.

This step uses the curl and jq applications, which need to be available in PATH.

Finally, you need to obtain a GitHub personal access token and set the GITHUB_TOKEN environment variable.

Release steps:

- Set the GITHUB_TOKEN environment variable.

- Inside wgsl-analyzer, run cargo xtask release. This will:

  - checkout the release branch
  - reset it to upstream/nightly
  - push it to upstream. This triggers GitHub Actions which:
    - runs cargo xtask dist to package binaries and VS Code extension
    - makes a GitHub release
    - publishes the VS Code extension to the marketplace
  - call the GitHub API for PR details
  - create a new changelog in wgsl-analyzer.github.io

- While the release is in progress, fill in the changelog.

- Commit & push the changelog.

- Run cargo xtask publish-release-notes <CHANGELOG>. This will convert the changelog entry in AsciiDoc to Markdown and update the body of GitHub Releases entry.

If the GitHub Actions release fails because of a transient problem like a timeout, you can re-run the job from the Actions console.

If it fails because of something that needs to be fixed, remove the release tag (if needed), fix the problem, then start over.

Make sure to remove the new changelog post created when running cargo xtask release a second time.

We release "nightly" every night automatically and promote the latest nightly to "stable" manually, every week.

We do not do "patch" releases, unless something truly egregious comes up.

To do a patch release, cherry-pick the fix on top of the current release branch and push the branch.

There is no need to write a changelog for a patch release; it is OK to include the notes about the fix in the next weekly one.

Note: we tag releases by date, so releasing a patch release on the same day should work (by overwriting a tag), but I am not 100% sure.

Permissions

Triage Team

We have a dedicated triage team that helps manage issues and pull requests on GitHub. Members of the triage team have permissions to:

- Label issues and pull requests

- Close and reopen issues

- Assign issues and PRs to milestones

This team plays a crucial role in ensuring that the project remains organized and that contributions are properly reviewed and addressed.

Architecture

This document describes the high-level architecture of wgsl-analyzer. If you want to familiarize yourself with the code base, you are just in the right place!

Since wgsl-analyzer is largely copied from rust-analyzer, you might also enjoy the Explaining Rust Analyzer series on YouTube.

It goes deeper than what is covered in this document, but will take some time to watch.

See also these implementation-related blog posts:

- https://rust-analyzer.github.io/blog/2019/11/13/find-usages.html

- https://rust-analyzer.github.io/blog/2020/07/20/three-architectures-for-responsive-ide.html

- https://rust-analyzer.github.io/blog/2020/09/16/challeging-LR-parsing.html

- https://rust-analyzer.github.io/blog/2020/09/28/how-to-make-a-light-bulb.html

- https://rust-analyzer.github.io/blog/2020/10/24/introducing-ungrammar.html

For older, by now mostly outdated stuff, see the guide and another playlist.

Bird's Eye View

- Entry Points

- Code Map

  - xtask
  - editors/code
  - lib
  - crates/parser
  - crates/syntax
  - crates/base-db
  - crates/hir-def, crates/hir_ty
  - crates/hir
  - crates/ide, crates/ide-db, crates/ide-assists, crates/ide-completion, crates/ide-diagnostics, crates/ide-ssr
  - crates/wgsl-analyzer
  - crates/toolchain, crates/project-model, crates/flycheck
  - crates/cfg
  - crates/vfs, crates/vfs-notify, crates/paths
  - crates/stdx
  - crates/profile
  - crates/span

- Cross-Cutting Concerns

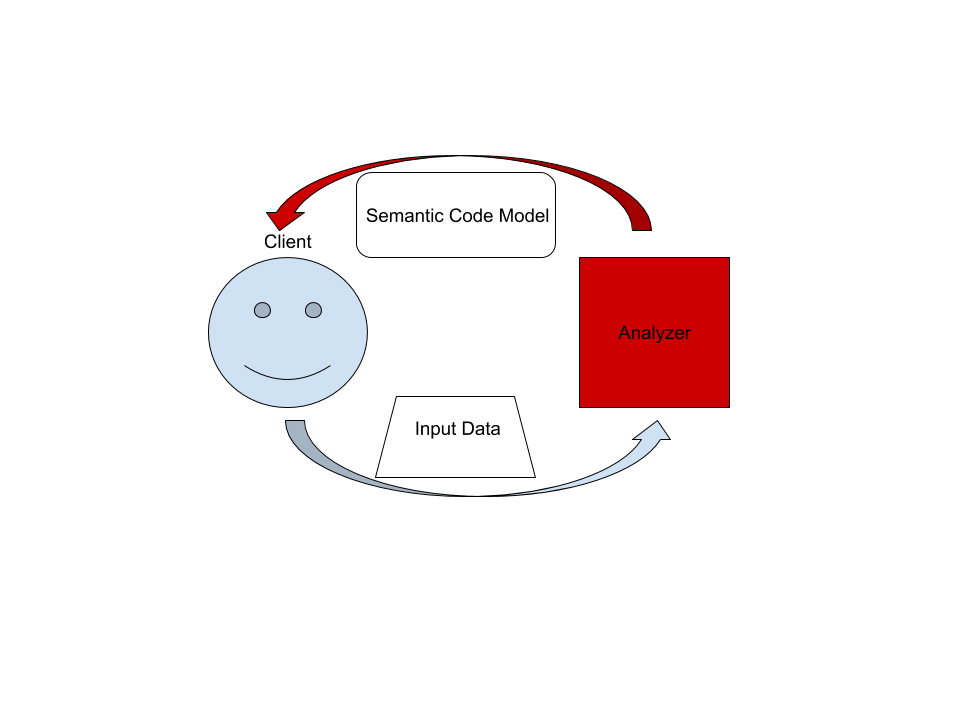

On the highest level, wgsl-analyzer is a thing which accepts input source code from the client and produces a structured semantic model of the code.

More specifically, input data consists of a set of text files ((PathBuf, String) pairs) and information about project structure, captured in the so-called CrateGraph.

The crate graph specifies which files are crate roots, which cfg flags are specified for each crate, and what dependencies exist between the crates.

This is the input (ground) state.

The analyzer keeps all this input data in memory and never does any IO.

Because the input data is source code, which typically measures in tens of megabytes at most, keeping everything in memory is OK.

A "structured semantic model" is basically an object-oriented representation of modules, functions, and types which appear in the source code. This representation is fully "resolved": all expressions have types, all references are bound to declarations, etc. This is derived state.

The client can submit a small delta of input data (typically, a change to a single file) and get a fresh code model which accounts for changes.

The underlying engine makes sure that the model is computed lazily (on-demand) and can be quickly updated for small modifications.

Entry Points

crates/wgsl-analyzer/src/bin/main.rs contains the main function which spawns LSP.

This is the entry point, but it front-loads a lot of complexity, so it is fine to just skim through it.

crates/wgsl-analyzer/src/handlers/request.rs implements all LSP requests and is a great place to start if you are already familiar with LSP.

Analysis and AnalysisHost types define the main API for consumers of IDE services.

Code Map

This section talks briefly about various important directories and data structures. Pay attention to the Architecture Invariant sections. They often talk about things which are deliberately absent in the source code.

Note also which crates are API Boundaries. Remember, rules at the boundary are different.

xtask

This is wgsl-analyzer's "build system".

We use cargo to compile Rust code, but there are also various other tasks, such as release management or local installation.

Those are handled by Rust code in the xtask directory.

editors/code

The VS Code extension.

lib

wgsl-analyzer-independent libraries which we publish to crates.io.

It is not heavily utilized at the moment.

crates/parser

Architecture Invariant: the parser is independent of the particular tree structure and particular representation of the tokens.

It transforms one flat stream of events into another flat stream of events.

Token independence allows us to parse out both text-based source code and tt-based macro input.

Tree independence allows us to more easily vary the syntax tree implementation.

It should also unlock efficient light-parsing approaches.

For example, you can extract the set of names defined in a file (for typo correction) without building a syntax tree.

Architecture Invariant: parsing never fails, the parser produces (T, Vec<Error>) rather than Result<T, Error>.
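As a small illustration of the (T, Vec<Error>) shape, here is a toy parser for a comma-separated list of integers. It is only a sketch: ParseError and parse_int_list are hypothetical names, and the real parser works on tokens and events rather than on strings like this.

```rust
/// A recoverable problem found while parsing (hypothetical type for this sketch).
#[derive(Debug, PartialEq)]
struct ParseError {
    /// Zero-based index of the offending list item.
    index: usize,
    message: String,
}

/// Parses a comma-separated list of integers.
/// Bad items are reported and skipped, so a value is always produced.
fn parse_int_list(text: &str) -> (Vec<i64>, Vec<ParseError>) {
    let mut values = Vec::new();
    let mut errors = Vec::new();
    for (index, item) in text.split(',').enumerate() {
        match item.trim().parse::<i64>() {
            Ok(value) => values.push(value),
            Err(error) => errors.push(ParseError {
                index,
                message: format!("invalid integer {:?}: {}", item.trim(), error),
            }),
        }
    }
    (values, errors)
}
```

The caller always gets a usable result plus a list of problems, which is what an editor needs while the user is in the middle of typing.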

crates/syntax

WESL syntax tree structure and parser.

See RFC and ./syntax.md for some design notes.

- rowan library is used for constructing syntax trees.

- ast provides a type safe API on top of the raw rowan tree.

- ungrammar description of the grammar, which is used to generate syntax_kinds and ast modules, using cargo test -p xtask command.

Tests for wa_syntax are mostly data-driven.

test_data/parser contains subdirectories with a bunch of .rs (test vectors) and .txt files with corresponding syntax trees.

During testing, we check .rs against .txt.

If the .txt file is missing, it is created (this is how you update tests).

Additionally, running the xtask test suite with cargo test -p xtask will walk the grammar module and collect all // test test_name comments into files inside test_data/parser/inline directory.

To update test data, run with UPDATE_EXPECT variable:

env UPDATE_EXPECT=1 cargo qt

After adding a new inline test you need to run cargo test -p xtask and also update the test data as described above.

Note api_walkthrough in particular: it shows off various methods of working with syntax tree.

See #TODO for an example PR which fixes a bug in the grammar.

Architecture Invariant: syntax crate is completely independent from the rest of wgsl-analyzer.

It knows nothing about salsa or LSP.

This is important because it is possible to make useful tooling using only the syntax tree.

Without semantic information, you do not need to be able to build code, which makes the tooling more robust.

See also https://mlfbrown.com/paper.pdf.

You can view the syntax crate as an entry point to wgsl-analyzer.

syntax crate is an API Boundary.

Architecture Invariant: syntax tree is a value type. The tree is fully determined by the contents of its syntax nodes; it does not need global context (like an interner) and does not store semantic info. Using the tree as a store for semantic info is convenient in traditional compilers, but does not work nicely in the IDE. Specifically, assists and refactors require transforming syntax trees, and that becomes awkward if you need to do something with the semantic info.

Architecture Invariant: syntax tree is built for a single file. This is to enable parallel parsing of all files.

Architecture Invariant: Syntax trees are by design incomplete and do not enforce well-formedness.

If an AST method returns an Option, it can be None at runtime, even if this is forbidden by the grammar.
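In practice this means that code consuming the AST handles the None case instead of unwrapping. The sketch below uses a hypothetical, heavily simplified FunctionDecl type (the real typed AST is generated on top of rowan) to show the pattern.

```rust
/// Hypothetical, simplified stand-in for a typed AST node of a function declaration.
struct FunctionDecl {
    /// `None` models source such as `fn () {}` where the name is missing.
    name: Option<String>,
}

impl FunctionDecl {
    /// Mirrors the style of AST accessors: the child may be absent.
    fn name(&self) -> Option<&str> {
        self.name.as_deref()
    }
}

/// Renders a label for an outline view and tolerates a missing name.
fn outline_label(function: &FunctionDecl) -> String {
    match function.name() {
        Some(name) => format!("fn {}", name),
        None => String::from("fn <missing name>"),
    }
}
```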

crates/base-db

We use the salsa crate for incremental and on-demand computation.

Roughly, you can think of salsa as a key-value store, but it can also compute derived values using specified functions.

The base-db crate provides basic infrastructure for interacting with salsa.

Crucially, it defines most of the "input" queries: facts supplied by the client of the analyzer.

Reading the docs of the base_db::input module should be useful: everything else is strictly derived from those inputs.
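The "key-value store that can also compute derived values" idea can be sketched with a toy database. This is not how salsa is implemented: salsa tracks dependencies per query, while this sketch invalidates every cached value on any input change. All names (ToyDatabase, set_file_text, line_count) are made up for the example.

```rust
use std::collections::HashMap;

/// Toy database: file texts are inputs, line counts are derived and memoized.
#[derive(Default)]
struct ToyDatabase {
    revision: u64,
    file_text: HashMap<u32, String>,
    /// Cached line counts, each tagged with the revision it was computed in.
    line_count_cache: HashMap<u32, (u64, usize)>,
    /// Counts how often the derived value was actually recomputed.
    recomputations: usize,
}

impl ToyDatabase {
    /// Input setter: stores the new text and bumps the global revision.
    fn set_file_text(&mut self, file: u32, text: &str) {
        self.revision += 1;
        self.file_text.insert(file, text.to_owned());
    }

    /// Derived query: computed on demand, reused while the revision is unchanged.
    fn line_count(&mut self, file: u32) -> usize {
        if let Some(&(revision, count)) = self.line_count_cache.get(&file) {
            if revision == self.revision {
                return count;
            }
        }
        self.recomputations += 1;
        let count = self.file_text.get(&file).map_or(0, |text| text.lines().count());
        self.line_count_cache.insert(file, (self.revision, count));
        count
    }
}
```

Asking for the same derived value twice without changing any input reuses the cached result; changing an input forces one recomputation.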

Architecture Invariant: particularities of the build system are not part of the ground state.

In particular, base-db knows nothing about cargo.

For example, cfg flags are a part of base_db, but features are not.

A foo feature is a Cargo-level concept, which is lowered by Cargo to --cfg feature=foo argument on the command line.

The CrateGraph structure is used to represent the dependencies between the crates abstractly.

Architecture Invariant: base-db does not know about file system and file paths.

Files are represented with opaque FileId, there is no operation to get an std::path::Path out of the FileId.
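One way to picture the opaque-id idea is a path interner that lives outside the analysis core and hands out small integer ids. The sketch below is an assumption-laden illustration: PathInterner is a hypothetical type, and this FileId is a local stand-in rather than the real one.

```rust
use std::collections::HashMap;

/// Opaque file handle; the analysis core would only ever see this.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct FileId(u32);

/// Hypothetical path interner that lives outside the analysis core.
#[derive(Default)]
struct PathInterner {
    ids: HashMap<String, FileId>,
}

impl PathInterner {
    /// Returns the existing id for `path`, or allocates the next free one.
    fn intern(&mut self, path: &str) -> FileId {
        let next = FileId(self.ids.len() as u32);
        *self.ids.entry(path.to_owned()).or_insert(next)
    }
}
```

Because only the interner knows the path behind an id, code that receives a FileId cannot accidentally start doing file system work.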

crates/hir-def, crates/hir_ty

These crates are the brain of wgsl-analyzer. This is the compiler part of the IDE.

hir-xxx crates have a strong ECS flavor, in that they work with raw ids and directly query the database.

There is very little abstraction here.

These crates integrate deeply with salsa and chalk.

Name resolution and type inference all happen here. These crates also define various intermediate representations of the core.

ItemTree condenses a single SyntaxTree into a "summary" data structure, which is stable over modifications to function bodies.

DefMap contains the module tree of a crate and stores module scopes.

Body stores information about expressions.

Architecture Invariant: these crates are not, and will never be, an API Boundary.

Architecture Invariant: these crates explicitly care about being incremental.

The core invariant we maintain is "typing inside a function's body never invalidates global derived data".

i.e., if you change the body of foo, all facts about bar should remain intact.

Architecture Invariant: hir exists only in context of particular crate instance with specific CFG flags. The same syntax may produce several instances of HIR if the crate participates in the crate graph more than once.

crates/hir

The top-level hir crate is an API Boundary.

If you think about "using wgsl-analyzer as a library", hir crate is most likely the interface that you will be talking to.

It wraps ECS-style internal API into a more OO-flavored API (with an extra db argument for each call).

Architecture Invariant: hir provides a static, fully resolved view of the code.

While internal hir-* crates compute things, hir, from the outside, looks like an inert data structure.

hir also handles the delicate task of going from syntax to the corresponding hir.

Remember that the mapping here is one-to-many.

See Semantics type and source_to_def module.

Note in particular a curious recursive structure in source_to_def.

We first resolve the parent syntax node to the parent hir element.

Then we ask the hir parent what syntax children it has.

Then we look for our node in the set of children.

This is the heart of many IDE features, like goto definition, which start with figuring out the hir node at the cursor. This is some kind of (yet unnamed) uber-IDE pattern, as it is present in Roslyn and Kotlin as well.

crates/ide, crates/ide-db, crates/ide-assists, crates/ide-completion, crates/ide-diagnostics, crates/ide-ssr

The ide crate builds on top of hir semantic model to provide high-level IDE features like completion or goto definition.

It is an API Boundary.

If you want to use IDE parts of wgsl-analyzer via LSP, custom flatbuffers-based protocol or just as a library in your text editor, this is the right API.

Architecture Invariant: ide crate's API is built out of POD types with public fields.

The API uses editor's terminology: it talks about offsets and string labels rather than in terms of definitions or types.

It is effectively the view in MVC and viewmodel in MVVM.

All arguments and return types are conceptually serializable.

In particular, syntax trees and hir types are generally absent from the API (but are used heavily in the implementation).

Shout outs to LSP developers for popularizing the idea that "UI" is a good place to draw a boundary at.

ide is also the first crate which has the notion of change over time.

AnalysisHost is a state to which you can transactionally apply_change.

Analysis is an immutable snapshot of the state.

Internally, ide is split across several crates.

ide-assists, ide-completion, ide-diagnostics and ide-ssr implement large isolated features.

ide-db implements common IDE functionality (notably, reference search is implemented here).

The ide contains a public API, as well as implementation for a plethora of smaller features.

Architecture Invariant: ide crate strives to provide a perfect API.

Although at the moment it has only one consumer, the LSP server, LSP does not influence its API design.

Instead, we keep in mind a hypothetical ideal client - an IDE tailored specifically for WGSL and WESL, every nook and cranny of which is packed with language-specific goodies.

crates/wgsl-analyzer

This crate defines the wgsl-analyzer binary, so it is the entry point.

It implements the language server.

Architecture Invariant: wgsl-analyzer is the only crate that knows about LSP and JSON serialization.

If you want to expose a data structure X from ide to LSP, do not make it serializable.

Instead, create a serializable counterpart in wgsl-analyzer crate and manually convert between the two.
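A sketch of what such a manual conversion might look like, using position conversion as the example. Both types are hypothetical stand-ins (the real server uses its own protocol types), and the function assumes that the offset lies on a character boundary inside the text.

```rust
/// Editor-neutral result as an `ide`-like layer might return it: a byte offset.
struct NavigationTarget {
    offset: usize,
}

/// Protocol-facing counterpart: zero-based line and column.
#[derive(Debug, PartialEq)]
struct ProtoPosition {
    line: u32,
    character: u32,
}

/// Manual conversion done at the server boundary.
/// Columns are counted in UTF-16 code units, the default position encoding in LSP.
fn to_proto_position(text: &str, target: &NavigationTarget) -> ProtoPosition {
    // Assumption: `offset` is in bounds and on a character boundary.
    let before = &text[..target.offset];
    let line = before.matches('\n').count() as u32;
    let line_start = before.rfind('\n').map_or(0, |index| index + 1);
    let character = before[line_start..].encode_utf16().count() as u32;
    ProtoPosition { line, character }
}
```

Keeping the conversion in the server crate means the ide-level type never needs to know about protocol details such as position encodings.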

GlobalState is the state of the server.

The main_loop defines the server event loop, which accepts requests and sends responses.

Requests that modify the state or might block a user's typing are handled on the main thread.

All other requests are processed in background.

Architecture Invariant: the server is stateless, a-la HTTP.

Sometimes state needs to be preserved between requests.

For example, "what is the edit for the fifth completion item of the last completion edit?".

For this, the second request should include enough info to re-create the context from scratch.

This generally means including all the parameters of the original request.

reload module contains the code that handles configuration and Cargo.toml changes.

This is a tricky business.

Architecture Invariant: wgsl-analyzer should be partially available even when the build is broken.

Reloading process should not prevent IDE features from working.

crates/toolchain, crates/project-model, crates/flycheck

These crates deal with invoking cargo to learn about project structure and get compiler errors for the "check on save" feature.

They use crates/paths heavily instead of std::path.

A single wgsl-analyzer process can serve many projects, so it is important that the server's current working directory does not leak.

crates/cfg

This crate is responsible for parsing, evaluation, and general definition of cfg attributes.

crates/vfs, crates/vfs-notify, crates/paths

These crates implement a virtual file system. They provide consistent snapshots of the underlying file system and insulate messy OS paths.

Architecture Invariant: vfs does not assume a single unified file system.

i.e., a single wgsl-analyzer process can act as a remote server for two different machines, where the same /tmp/foo.rs path points to different files.

For this reason, all path APIs generally take some existing path as a "file system witness".

crates/stdx

This crate contains various non-wgsl-analyzer specific utils, which could have been in std, as well as copies of unstable std items we would like to make use of already, like std::str::split_once.

crates/profile

This crate contains utilities for CPU and memory profiling.

crates/span

This crate exposes types and functions related to wgsl-analyzer's span for macros.

A span is effectively a text range relative to some item in a file with a given SyntaxContext (hygiene).

Cross-Cutting Concerns

This section talks about the things which are everywhere and nowhere in particular.

Stability Guarantees

One of the reasons wgsl-analyzer moves relatively fast is that we do not introduce new stability guarantees.

Instead, as much as possible we leverage existing ones.

Examples:

- The ide API of wgsl-analyzer is explicitly unstable, but the LSP interface is stable, and here we just implement a stable API managed by someone else.

- WGSL spec is almost stable, and it is the primary input to wgsl-analyzer.

Exceptions:

- We ship some LSP extensions, and we try to keep those somewhat stable. Here, we need to work with a finite set of editor maintainers, so not providing rock-solid guarantees works.

Code generation

Some components in this repository are generated through automatic processes.

Generated code is updated automatically on cargo test.

Generated code is generally committed to the git repository.

In particular, we generate:

- Various sections of the manual:

  - features
  - assists
  - config

- Documentation tests for assists

See the xtask\src\codegen\assists_doc_tests.rs module for details.

Cancellation

Suppose that the IDE is in the process of computing syntax highlighting when the user types foo.

What should happen?

wgsl-analyzer's answer is that the highlighting process should be cancelled - its results are now stale, and it also blocks modification of the inputs.

The salsa database maintains a global revision counter.

When applying a change, salsa bumps this counter and waits until all other threads using salsa finish.

If a thread does salsa-based computation and notices that the counter is incremented, it panics with a special value (see Canceled::throw).

That is, wgsl-analyzer requires unwinding.

ide is the boundary where the panic is caught and transformed into a Result<T, Cancelled>.
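The unwind-and-catch mechanism can be sketched with the standard library alone. This is an illustration under assumptions, not salsa's or wgsl-analyzer's code: the Cancelled type here is a local stand-in, and check_cancelled and catch_cancellation are hypothetical helpers.

```rust
use std::panic::{self, AssertUnwindSafe};

/// Marker payload used to unwind out of a stale computation.
#[derive(Debug, PartialEq)]
struct Cancelled;

/// Called from inside a computation; unwinds if the inputs changed since it started.
fn check_cancelled(current_revision: u64, started_at: u64) {
    if current_revision != started_at {
        // `resume_unwind` starts unwinding without invoking the panic hook.
        panic::resume_unwind(Box::new(Cancelled));
    }
}

/// Boundary: turns the special unwind into `Err(Cancelled)` and re-raises real panics.
fn catch_cancellation<T>(compute: impl FnOnce() -> T) -> Result<T, Cancelled> {
    match panic::catch_unwind(AssertUnwindSafe(compute)) {
        Ok(value) => Ok(value),
        Err(payload) => match payload.downcast::<Cancelled>() {
            Ok(_) => Err(Cancelled),
            Err(other) => panic::resume_unwind(other),
        },
    }
}
```

This only works when the binary is built with unwinding panics, which is why the text above says that unwinding is required.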

Testing

wgsl-analyzer has three interesting system boundaries to concentrate tests on.

The outermost boundary is the wgsl-analyzer crate, which defines an LSP interface in terms of stdio.

We do integration testing of this component, by feeding it with a stream of LSP requests and checking responses.

These tests are known as "heavy", because they interact with Cargo and read real files from disk.

For this reason, we try to avoid writing too many tests on this boundary: in a statically typed language, it is hard to make an error in the protocol itself if messages are themselves typed.

Heavy tests are only run when RUN_SLOW_TESTS env var is set.

The middle, and most important, boundary is ide.

Unlike wgsl-analyzer, which exposes an LSP API, ide uses a Rust API and is intended for use by various tools.

A typical test creates an AnalysisHost, calls some Analysis functions and compares the results against expectation.

The innermost and most elaborate boundary is hir.

It has a much richer vocabulary of types than ide, but the basic testing setup is the same: we create a database, run some queries, assert result.

For comparisons, we use the expect-test crate for snapshot testing.

To test various analysis corner cases and avoid forgetting about old tests, we use so-called marks. See the cov_mark crate documentation for more.

Architecture Invariant: wgsl-analyzer tests do not use libcore or libstd.

All required library code must be a part of the tests.

This ensures fast test execution.

Architecture Invariant: tests are data driven and do not test the API. Tests which directly call various API functions are a liability, because they make refactoring the API significantly more complicated. Most of the tests look like this:

use expect_test::expect;

#[track_caller]
fn check(input: &str, expect: expect_test::Expect) {
    // The single place that actually exercises a particular API
}

#[test]
fn foo() {
    check("foo", expect![["bar"]]);
}

#[test]
fn spam() {
    check("spam", expect![["eggs"]]);
}
// ...and a hundred more tests that do not care about the specific API at all.

To specify input data, we use a single string literal in a special format, which can describe a set of WGSL files.

See Fixture and its module for fixture examples and documentation.

Architecture Invariant: all code invariants are tested by #[test] tests.

There are no additional checks in CI; formatting and tidy tests are run with cargo test.

Architecture Invariant: tests do not depend on any kind of external resources, they are perfectly reproducible.

Performance Testing

TBA, take a look at the metrics xtask and #[test] fn benchmark_xxx() functions.

Error Handling

Architecture Invariant: core parts of wgsl-analyzer (ide/hir) do not interact with the outside world and thus cannot fail.

Only parts touching LSP are allowed to do IO.

Internals of wgsl-analyzer need to deal with broken code, but this is not an error condition.

wgsl-analyzer is robust: various analyses compute (T, Vec<Error>) rather than Result<T, Error>.

wgsl-analyzer is a complex, long-running process.

It will always have bugs and panics; to mitigate this, a panic in an isolated feature should not bring down the whole process.

Each LSP-request is protected by a catch_unwind.

We use always and never macros instead of assert to gracefully recover from impossible conditions.

Observability

wgsl-analyzer is a long-running process, so it is important to understand what happens inside.

We have several instruments for that.

The event loop that runs wgsl-analyzer is very explicit.

Rather than spawning futures or scheduling callbacks (open), the event loop accepts an enum of possible events (closed).

It is easy to see all the things that trigger wgsl-analyzer processing together with their performance.
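The "closed enum of events" style can be sketched as follows. The Event variants and run_event_loop are hypothetical and far simpler than the real main loop; the point is only that a single exhaustive match is the one place where work gets triggered.

```rust
use std::sync::mpsc;

/// Closed set of things the loop can react to (hypothetical variants).
#[derive(Debug)]
enum Event {
    Request { id: u32, method: String },
    FileChanged { path: String },
    Shutdown,
}

/// Handles events until `Shutdown` arrives or all senders are gone.
/// Returns a log of what was handled, in order.
fn run_event_loop(events: mpsc::Receiver<Event>) -> Vec<String> {
    let mut log = Vec::new();
    for event in events {
        // Adding a new kind of event forces this match to be updated.
        match event {
            Event::Request { id, method } => log.push(format!("request {} {}", id, method)),
            Event::FileChanged { path } => log.push(format!("changed {}", path)),
            Event::Shutdown => break,
        }
    }
    log
}
```

Because every trigger passes through one match, it is straightforward to wrap each arm with timing or logging.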

wgsl-analyzer includes a simple hierarchical profiler (hprof).

It is enabled with WA_PROFILE='*>50' env var (log all (*) actions which take more than 50 ms) and produces output like:

85ms - handle_completion

68ms - import_on_the_fly

67ms - import_assets::search_for_relative_paths

0ms - crate_def_map:wait (804 calls)

0ms - find_path (16 calls)

2ms - find_similar_imports (1 calls)

0ms - generic_params_query (334 calls)

59ms - trait_solve_query (186 calls)

0ms - Semantics::analyze_impl (1 calls)

1ms - render_resolution (8 calls)

0ms - Semantics::analyze_impl (5 calls)

This is cheap enough to enable in production.

Similarly, we have live object counting (WA_COUNT=1).

It is not cheap enough to enable in production, and this is a bug which should be fixed.

Configurability

wgsl-analyzer strives to be as configurable as possible while offering reasonable defaults where no configuration exists yet.

The rule of thumb is to enable most features by default unless they are buggy or degrade performance too much.

There will always be features that some people find more annoying than helpful, so giving the users the ability to tweak or disable these is a big part of offering a good user experience.

Enabling them by default is a matter of discoverability, as many users do not know about some features even though they are presented in the manual.

Mind the code-architecture gap: at the moment, we are using fewer feature flags than we really should.

Debugging VS Code plugin and the language server

Prerequisites

- Install LLDB and the LLDB Extension.

- Open the root folder in VS Code. Here you can access the preconfigured debug setups.

- Install all TypeScript dependencies

  cd editors/code
  npm ci

Common knowledge

- All debug configurations open a new [Extension Development Host] VS Code instance where only the wgsl-analyzer extension being debugged is enabled.

- To activate the extension you need to open any WESL project's folder in [Extension Development Host].

Debug TypeScript VS Code extension

- Run Installed Extension - runs the extension with the globally installed wgsl-analyzer binary.

- Run Extension (Debug Build) - runs extension with the locally built LSP server (target/debug/wgsl-analyzer).

TypeScript debugging is configured to watch your source edits and recompile.

To apply changes to an already running debug process, press Ctrl+Shift+P and run the following command in your [Extension Development Host]:

> Developer: Reload Window

Debugging the LSP server

- When attaching a debugger to an already running wgsl-analyzer server on Linux, you might need to enable ptrace for unrelated processes by running:

  echo 0 | sudo tee /proc/sys/kernel/yama/ptrace_scope

- By default, the LSP server is built without debug information. To enable it, you will need to change Cargo.toml:

  [profile.dev]
  debug = 2

- Select Run Extension (Debug Build) to run your locally built target/debug/wgsl-analyzer.

- In the original VS Code window once again select the Attach To Server debug configuration.

- A list of running processes should appear. Select the wgsl-analyzer from this repo.

- Navigate to crates/wgsl-analyzer/src/main_loop.rs and add a breakpoint to the on_request function.

- Go back to the [Extension Development Host] instance and hover over a variable in a WGSL or WESL file, and your breakpoint should hit.

If you need to debug the server from the very beginning, including its initialization code, you can use the --wait-dbg command line argument or WA_WAIT_DBG environment variable.

The server will spin at the beginning of the try_main function (see crates\wgsl-analyzer\src\bin\main.rs):

let mut d = 4;
while d == 4 { // set a breakpoint here and change the value
    d = 4;
}

However, for this to work, you will need to enable debug_assertions in your build:

RUSTFLAGS='--cfg debug_assertions' cargo build --release

Demo

- Debugging TypeScript VS Code extension.

- Debugging the LSP server (rust-analyzer, same advice applies).

Troubleshooting

Cannot find the wgsl-analyzer process

It could be a case of just jumping the gun.

Make sure you open a WGSL or WESL file in the [Extension Development Host] and try again.

Cannot connect to wgsl-analyzer

Make sure you have run echo 0 | sudo tee /proc/sys/kernel/yama/ptrace_scope.

By default this should reset back to 1 every time you log in.

Breakpoints are never being hit

Check your version of lldb.

If it is version 6 or lower, use the classic adapter type.

This is the lldb.adapterType setting in the settings file.

If you are running lldb version 7, change the lldb adapter type to bundled or native.

Guide to wgsl-analyzer

About the guide

This guide describes the current state of wgsl-analyzer as of the 2025-xx-xx release (git tag 2025-xx-xx).

Its purpose is to document various problems and architectural solutions related to the problem of building an IDE-first compiler for WGSL and WESL.

- The big picture

- IDE API

- Inputs

- Source roots (a.k.a. "Filesystems are horrible")

- Language Server Protocol

- Salsa

- Salsa Input Queries

- From text to semantic model

- Syntax trees

- Building a Module Tree

- Location Interner pattern

- Macros and recursive locations

- Name resolution

- Source Map pattern

- Type inference

- Tying it all together: completion

The big picture

On the highest possible level, wgsl-analyzer is a stateful component.

A client may apply changes to the analyzer (new contents of foo.rs file is "fn main() {}") and it may ask semantic questions about the current state (what is the definition of the identifier with offset 92 in file bar.rs?).

Two important properties hold:

- Analyzer does not do any I/O. It starts in an empty state and all input data is provided via apply_change API.

- Only queries about the current state are supported. One can, of course, simulate undo and redo by keeping a log of changes and inverse changes respectively.

IDE API

To see the bigger picture of how the IDE features work, examine the AnalysisHost and Analysis pair of types.

AnalysisHost has three methods:

- default() for creating an empty analysis instance

- apply_change(&mut self) to make changes (this is how you get from an empty state to something interesting)

- analysis(&self) to get an instance of Analysis

Analysis has a ton of methods for IDEs, like goto_definition, or completions.

Both inputs and outputs of Analysis' methods are formulated in terms of files and offsets, and not in terms of Rust concepts like structs, traits, etc.

The "typed" API with Rust-specific types is slightly lower in the stack, we will talk about it later.

The reason for this separation of Analysis and AnalysisHost is that we want to apply changes "uniquely", but we might also want to fork an Analysis and send it to another thread for background processing.

That is, there is only a single AnalysisHost, but there may be several (equivalent) Analysis.
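The host/snapshot split can be sketched with a toy pair of types. Note the deliberate simplification: this sketch uses copy-on-write (Arc::make_mut), so old snapshots simply keep seeing the old state, whereas the real AnalysisHost cancels outstanding snapshots and waits for them to be dropped. ToyAnalysisHost and ToyAnalysis are hypothetical names.

```rust
use std::collections::HashMap;
use std::sync::Arc;

/// Immutable snapshot; cheap to clone and safe to send to another thread.
#[derive(Clone)]
struct ToyAnalysis {
    files: Arc<HashMap<u32, String>>,
}

impl ToyAnalysis {
    /// A stand-in for a real query such as goto definition.
    fn file_len(&self, file: u32) -> Option<usize> {
        self.files.get(&file).map(|text| text.len())
    }
}

/// The single mutable owner of the state.
#[derive(Default)]
struct ToyAnalysisHost {
    files: Arc<HashMap<u32, String>>,
}

impl ToyAnalysisHost {
    /// Applies a change; snapshots taken earlier are unaffected.
    fn apply_change(&mut self, file: u32, text: &str) {
        Arc::make_mut(&mut self.files).insert(file, text.to_owned());
    }

    /// Hands out a snapshot of the current state.
    fn analysis(&self) -> ToyAnalysis {
        ToyAnalysis { files: Arc::clone(&self.files) }
    }
}
```

The mutable borrow required by apply_change is what makes changes "unique", while any number of snapshots can be read concurrently.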

Note that all of the Analysis API methods return Cancellable<T>.

This is required to be responsive in an IDE setting.

Sometimes a long-running query is being computed and the user types something in the editor and asks for completion.

In this case, we cancel the long-running computation (so it returns Err(Cancelled)), apply the change and execute the request for completion.

We never use stale data to answer requests.

Under the hood, AnalysisHost "remembers" all outstanding Analysis instances.

The AnalysisHost::apply_change method cancels all Analysis instances, blocks until all of them are dropped, and then applies changes in-place.

This may be familiar to Rustaceans who use read-write locks for interior mutability.
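The host/snapshot split can be sketched with a toy model. Everything here (ToyHost, ToyAnalysis, file_len) is a hypothetical name invented for illustration, not the real API. One deliberate simplification: the real apply_change blocks until outstanding snapshots are dropped, whereas this sketch copies the state instead, so it can run on a single thread.

```rust
use std::collections::HashMap;
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;

/// Marker error returned by queries on a cancelled snapshot.
#[derive(Debug, PartialEq)]
pub struct Cancelled;
pub type Cancellable<T> = Result<T, Cancelled>;

/// All analyzer inputs: file id -> file text (hypothetical, much simplified).
#[derive(Clone, Default)]
struct State {
    files: HashMap<u32, String>,
}

/// The single mutable owner of the state.
#[derive(Default)]
pub struct ToyHost {
    state: Arc<State>,
    cancelled: Arc<AtomicBool>,
}

/// A read-only snapshot that can be sent to another thread.
pub struct ToyAnalysis {
    state: Arc<State>,
    cancelled: Arc<AtomicBool>,
}

impl ToyHost {
    pub fn apply_change(&mut self, file_id: u32, text: Option<String>) {
        // Cancel every snapshot handed out so far, then start a fresh flag.
        self.cancelled.store(true, Ordering::SeqCst);
        self.cancelled = Arc::new(AtomicBool::new(false));
        // Simplification: copy-on-write instead of blocking on snapshot drops.
        let state = Arc::make_mut(&mut self.state);
        match text {
            Some(text) => {
                state.files.insert(file_id, text);
            }
            None => {
                state.files.remove(&file_id);
            }
        }
    }

    pub fn analysis(&self) -> ToyAnalysis {
        ToyAnalysis {
            state: Arc::clone(&self.state),
            cancelled: Arc::clone(&self.cancelled),
        }
    }
}

impl ToyAnalysis {
    /// A stand-in for a real query such as goto_definition.
    pub fn file_len(&self, file_id: u32) -> Cancellable<Option<usize>> {
        if self.cancelled.load(Ordering::SeqCst) {
            return Err(Cancelled);
        }
        Ok(self.state.files.get(&file_id).map(|text| text.len()))
    }
}

/// Takes a snapshot, applies a change, and queries both snapshots.
pub fn demo() -> (Cancellable<Option<usize>>, Cancellable<Option<usize>>) {
    let mut host = ToyHost::default();
    host.apply_change(0, Some("fn main() {}".to_string()));
    let stale = host.analysis();
    host.apply_change(0, Some("fn main() { }".to_string()));
    let fresh = host.analysis();
    (stale.file_len(0), fresh.file_len(0))
}
```

In demo, the snapshot taken before the second change reports Err(Cancelled), while the fresh one answers from the new text, which mirrors the rule that stale data is never used to answer requests.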

Next, the inputs to the Analysis are discussed in detail.

Inputs

rust-analyzer never does any I/O itself.

All inputs get passed explicitly via the AnalysisHost::apply_change method, which accepts a single argument, a Change.

Change is a wrapper for FileChange that adds proc-macro knowledge.

FileChange is a builder for a single change "transaction," so it suffices to study its methods to understand all the input data.

The change_file method controls the set of the input files, where each file has an integer id (FileId, picked by the client) and text (Option<Arc<str>>).

Paths are tricky; they will be explained below, in the source roots section, together with the set_roots method.

The "source root" is_library flag along with the concept of durability allows us to add a group of files that are assumed to rarely change.

It is mostly an optimization and does not change the fundamental picture.

The set_crate_graph method allows us to control how the input files are partitioned into compilation units -- crates.

It also controls (in theory, not implemented yet) cfg flags.

CrateGraph is a directed acyclic graph of crates.

Each crate has a root FileId, a set of active cfg flags, and a set of dependencies.

Each dependency is a pair of a crate and a name.

It is possible to have two crates with the same root FileId but different cfg-flags/dependencies.

This model is lower-level than Cargo's model of packages: each Cargo package consists of several targets, each of which is a separate crate (or several crates, if you try different feature combinations).

Procedural macros are inputs as well, roughly modeled as a crate with a bunch of additional black box dyn Fn(TokenStream) -> TokenStream functions.
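As a rough illustration of the crate graph described above, the following sketch models crates as nodes with a root file, cfg flags, and named dependencies, and rejects edges that would introduce a cycle. The types (ToyCrateGraph, CrateData, CycleError) are hypothetical and are not the analyzer's actual definitions.

```rust
use std::collections::BTreeSet;

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct FileId(pub u32);

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct CrateId(pub usize);

/// A dependency is a pair of a crate and the name it is visible under.
pub struct Dependency {
    pub krate: CrateId,
    pub name: String,
}

pub struct CrateData {
    pub root_file: FileId,
    pub cfg_flags: BTreeSet<String>,
    pub dependencies: Vec<Dependency>,
}

#[derive(Debug)]
pub struct CycleError;

#[derive(Default)]
pub struct ToyCrateGraph {
    crates: Vec<CrateData>,
}

impl ToyCrateGraph {
    pub fn add_crate(&mut self, root_file: FileId, cfg_flags: &[&str]) -> CrateId {
        self.crates.push(CrateData {
            root_file,
            cfg_flags: cfg_flags.iter().map(|flag| flag.to_string()).collect(),
            dependencies: Vec::new(),
        });
        CrateId(self.crates.len() - 1)
    }

    /// Adds the edge `from -> to`, refusing it if `to` already reaches `from`.
    pub fn add_dependency(
        &mut self,
        from: CrateId,
        name: &str,
        to: CrateId,
    ) -> Result<(), CycleError> {
        if self.reaches(to, from) {
            return Err(CycleError);
        }
        self.crates[from.0].dependencies.push(Dependency {
            krate: to,
            name: name.to_string(),
        });
        Ok(())
    }

    /// Depth-first search along dependency edges.
    fn reaches(&self, start: CrateId, target: CrateId) -> bool {
        let mut seen = vec![false; self.crates.len()];
        let mut stack = vec![start];
        while let Some(current) = stack.pop() {
            if current == target {
                return true;
            }
            if std::mem::replace(&mut seen[current.0], true) {
                continue;
            }
            stack.extend(self.crates[current.0].dependencies.iter().map(|dep| dep.krate));
        }
        false
    }
}

/// Two crates share a root file but differ in cfg flags; a cycle is rejected.
pub fn demo() -> (bool, bool, bool) {
    let mut graph = ToyCrateGraph::default();
    let lib = graph.add_crate(FileId(1), &[]);
    let lib_test = graph.add_crate(FileId(1), &["test"]);
    let bin = graph.add_crate(FileId(2), &[]);
    let edge_ok = graph.add_dependency(bin, "lib", lib).is_ok();
    let cycle_rejected = graph.add_dependency(lib, "bin", bin).is_err();
    (lib != lib_test, edge_ok, cycle_rejected)
}
```

Note that lib and lib_test get distinct crate ids even though they share FileId(1), which is the situation the text describes for crates built with different cfg flags.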

Next, the process of building an LSP server on top of Analysis is discussed.

However, before that, it is important to address the issue with paths.

Source roots (a.k.a. "Filesystems are horrible")

This is a non-essential section; feel free to skip it.

The previous section said that the filesystem path is an attribute of a file, but this is not the whole truth.

Making it an absolute PathBuf would be bad for several reasons.

First, filesystems are full of (platform-dependent) edge cases:

- It is hard (requires a syscall) to decide if two paths are equivalent.

- Some filesystems are case-insensitive (e.g. the default on macOS).

- Paths are not necessarily UTF-8.

- Symlinks can form cycles.

Second, this might hurt the reproducibility and hermeticity of builds.

In theory, moving a project from /foo/bar/my-project to /spam/eggs/my-project should not change a bit in the output.

However, if the absolute path is a part of the input, it is at least in theory observable, and could affect the output.

Yet another problem is that we really really want to avoid doing I/O, but with Rust the set of "input" files is not necessarily known up-front.

In theory, you can have #[path="/dev/random"] mod foo;.

To solve (or explicitly refuse to solve) these problems rust-analyzer uses the concept of a "source root".

Roughly speaking, source roots are the contents of a directory on a file system, like /home/matklad/projects/rustraytracer/**.rs.

More precisely, all files (FileIds) are partitioned into disjoint SourceRoots.

Each file has a relative UTF-8 path within the SourceRoot.

SourceRoot has an identity (integer ID).

Crucially, the root path of the source root itself is unknown to the analyzer: A client is supposed to maintain a mapping between SourceRoot IDs (which are assigned by the client) and actual PathBufs.

SourceRoots give a sane tree model of the file system to the analyzer.

Note that mod, #[path] and include!() can only reference files from the same source root.

It is of course possible to explicitly add extra files to the source root, even /dev/random.
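The division of responsibility can be sketched as follows: the analyzer side only sees relative UTF-8 paths inside a root, while the client keeps the mapping from root IDs to real directories. ToySourceRoot and ClientRootPaths are hypothetical names used only for this illustration.

```rust
use std::collections::HashMap;
use std::path::PathBuf;

#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub struct FileId(pub u32);

#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub struct SourceRootId(pub u32);

/// Analyzer side: relative UTF-8 path -> file. No absolute paths anywhere.
#[derive(Default)]
pub struct ToySourceRoot {
    files: HashMap<String, FileId>,
}

impl ToySourceRoot {
    pub fn insert(&mut self, relative_path: &str, file: FileId) {
        self.files.insert(relative_path.to_string(), file);
    }

    pub fn resolve(&self, relative_path: &str) -> Option<FileId> {
        self.files.get(relative_path).copied()
    }
}

/// Client side: the only place that knows where a root lives on disk.
#[derive(Default)]
pub struct ClientRootPaths {
    roots: HashMap<SourceRootId, PathBuf>,
}

impl ClientRootPaths {
    pub fn set_root(&mut self, id: SourceRootId, path: &str) {
        self.roots.insert(id, PathBuf::from(path));
    }

    pub fn absolute_path(&self, id: SourceRootId, relative_path: &str) -> Option<PathBuf> {
        self.roots.get(&id).map(|root| root.join(relative_path))
    }
}

/// Moving the project only touches the client mapping, not the analyzer input.
pub fn demo() -> (Option<FileId>, Option<PathBuf>) {
    let root_id = SourceRootId(0);
    let mut root = ToySourceRoot::default();
    root.insert("src/lib.rs", FileId(7));

    let mut client = ClientRootPaths::default();
    client.set_root(root_id, "/foo/bar/my-project");
    // The project is moved; the analyzer-side data stays byte-for-byte the same.
    client.set_root(root_id, "/spam/eggs/my-project");

    (
        root.resolve("src/lib.rs"),
        client.absolute_path(root_id, "src/lib.rs"),
    )
}
```

Because the absolute location never enters ToySourceRoot, it cannot be observed by analysis, which is the hermeticity argument made earlier in this section.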

Language Server Protocol

The Analysis API is exposed via the JSON RPC-based language server protocol.

The hard part here is managing changes (which can come either from the file system or from the editor) and concurrency (we want to spawn background jobs for things like syntax highlighting).

We use the event loop pattern to manage the zoo, and the loop is the GlobalState::run function initiated by main_loop after GlobalState::new does a one-time initialization and tearing down of the resources.

A typical analyzer session involves several steps.

First, we need to figure out what to analyze.

To do this, we run cargo metadata to learn about Cargo packages for the current workspace and dependencies, and we run rustc --print sysroot and scan the "sysroot" (the directory containing the current Rust toolchain's files) to learn about crates like std.

This happens in the GlobalState::fetch_workspaces method.

We load this configuration at the start of the server in GlobalState::new, but it is also triggered by workspace change events and requests to reload the workspace from the client.

The ProjectModel we get after this step is very Cargo- and sysroot-specific; it needs to be lowered to get the input in the form of Change.

This happens in the GlobalState::process_changes method.

Specifically:

- Create SourceRoots for each Cargo package(s) and sysroot.

- Schedule a filesystem scan of the roots.

- Create an analyzer's Crate for each Cargo target and sysroot crate.

- Set up dependencies between the crates.

The results of the scan (which may take a while) will be processed in the body of the main loop, just like any other change. The main loop handles changes from both sources mentioned earlier: the file system and the editor.

After a single loop's turn, we group the changes into one Change and apply it.

This always happens on the main thread and blocks the loop.

To handle requests, like "goto definition", we create an instance of the Analysis and schedule the task (which consumes Analysis) on the thread pool.

The task calls the corresponding Analysis method, while massaging the types into the LSP representation.

Keep in mind that if we are executing "goto definition" on the thread pool and a new change comes in, the task will be canceled as soon as the main loop calls apply_change on the AnalysisHost.
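One turn of such a loop might look roughly like the sketch below. It is a single-threaded toy under stated assumptions: Event, ToyState, and loop_turn are hypothetical names, the turn drains whatever events are pending, and requests are answered inline rather than on a thread pool as the real server does.

```rust
use std::collections::HashMap;
use std::sync::mpsc::{channel, Receiver};

/// Everything the loop reacts to (hypothetical, heavily simplified).
pub enum Event {
    FileSystem { file_id: u32, text: String },
    Editor { file_id: u32, text: String },
    Request { file_id: u32 },
}

#[derive(Default)]
pub struct ToyState {
    files: HashMap<u32, String>,
    /// How many grouped changes have been applied so far.
    pub applied_batches: u32,
}

/// Drains pending events, applies all changes as one batch, then answers requests.
pub fn loop_turn(events: &Receiver<Event>, state: &mut ToyState) -> Vec<Option<usize>> {
    let mut batch = Vec::new();
    let mut requests = Vec::new();
    for event in events.try_iter() {
        match event {
            Event::FileSystem { file_id, text } | Event::Editor { file_id, text } => {
                batch.push((file_id, text));
            }
            Event::Request { file_id } => requests.push(file_id),
        }
    }
    if !batch.is_empty() {
        // One grouped change per turn; later entries for a file win.
        state.files.extend(batch);
        state.applied_batches += 1;
    }
    // Stand-in for a real query: report the length of the requested file.
    requests
        .into_iter()
        .map(|file_id| state.files.get(&file_id).map(String::len))
        .collect()
}

/// Two changes and one request arrive before the turn runs.
pub fn demo() -> (Vec<Option<usize>>, u32) {
    let (sender, receiver) = channel();
    sender
        .send(Event::FileSystem { file_id: 1, text: "fn a() {}".into() })
        .unwrap();
    sender
        .send(Event::Editor { file_id: 1, text: "fn ab() {}".into() })
        .unwrap();
    sender.send(Event::Request { file_id: 1 }).unwrap();

    let mut state = ToyState::default();
    let answers = loop_turn(&receiver, &mut state);
    (answers, state.applied_batches)
}
```

Both changes are folded into a single batch before the request is answered, so the request observes only the latest text.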

This concludes the overview of the analyzer's programming interface. Next, explore the implementation details.

Salsa

The most straightforward way to implement an "apply change, get analysis, repeat" API would be to maintain the input state and to compute all possible analysis information from scratch after every change. This works, but scales poorly with the size of the project. To make this fast, we need to take advantage of the fact that most of the changes are small, and that analysis results are unlikely to change significantly between invocations.

To do this we use salsa: a framework for incremental on-demand computation.

You can skip the rest of the section if you are familiar with rustc's red-green algorithm (which is used for incremental compilation).

It is better to refer to salsa's docs to learn about it. Here is a small excerpt:

The key idea of salsa is that you define your program as a set of queries.

Every query is used like a function K -> V that maps from some key of type K to a value of type V.

Queries come in two basic varieties:

- Inputs: the base inputs to your system. You can change these whenever you like.

- Functions: pure functions (no side effects) that transform your inputs into other values. The results of queries are memoized to avoid recomputing them a lot. When you make changes to the inputs, we will figure out (fairly intelligently) when we can reuse these memoized values and when we have to recompute them.

For further discussion, it's important to understand one bit of "fairly intelligently".

Suppose we have two functions, f1 and f2, and one input, i.

We call f1(X) which in turn calls f2(Y) which inspects i(Z).

i(Z) returns some value V1, f2 uses that and returns R1, f1 uses that and returns O.

Now, suppose i at Z is changed to V2 from V1.

Try to compute f1(X) again.

Because f1(X) (transitively) depends on i(Z), we cannot just reuse its value as is.

However, if f2(Y) is still equal to R1 (despite i's change), we, in fact, can reuse O as the result of f1(X).

And that is how salsa works: it recomputes results in reverse order, starting from inputs and progressing towards outputs, stopping as soon as it sees an intermediate value that has not changed.
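The f1/f2/i example can be made concrete with a hand-rolled toy. This is not salsa's API: ToyDb, Memo, and the revision counters are assumptions chosen to illustrate the "stop at an unchanged intermediate value" idea for exactly one input and two derived functions. Here f2 is arbitrarily defined as i / 10 and f1 as f2 * 100.

```rust
/// A memoized value plus the revisions at which it last changed and was last verified.
#[derive(Clone, Copy)]
struct Memo {
    value: i64,
    changed_at: u64,
    verified_at: u64,
}

pub struct ToyDb {
    revision: u64,
    input: i64,
    input_changed_at: u64,
    f2_memo: Option<Memo>,
    f1_memo: Option<Memo>,
    /// Counters showing how often each function body actually ran.
    pub f1_runs: u32,
    pub f2_runs: u32,
}

impl ToyDb {
    pub fn new(input: i64) -> Self {
        ToyDb {
            revision: 0,
            input,
            input_changed_at: 0,
            f2_memo: None,
            f1_memo: None,
            f1_runs: 0,
            f2_runs: 0,
        }
    }

    /// Setting the input i to a new value starts a new revision.
    pub fn set_input(&mut self, value: i64) {
        if value != self.input {
            self.revision += 1;
            self.input = value;
            self.input_changed_at = self.revision;
        }
    }

    /// f2 depends directly on the input.
    fn f2(&mut self) -> Memo {
        if let Some(memo) = self.f2_memo {
            if self.input_changed_at <= memo.verified_at {
                let memo = Memo { verified_at: self.revision, ..memo };
                self.f2_memo = Some(memo);
                return memo;
            }
        }
        self.f2_runs += 1;
        let value = self.input / 10;
        // If the recomputed value is the same, keep the old "changed at" stamp.
        let changed_at = match self.f2_memo {
            Some(old) if old.value == value => old.changed_at,
            _ => self.revision,
        };
        let memo = Memo { value, changed_at, verified_at: self.revision };
        self.f2_memo = Some(memo);
        memo
    }

    /// f1 depends only on f2, so it is reused whenever f2's value is unchanged.
    pub fn f1(&mut self) -> i64 {
        let dep = self.f2();
        if let Some(memo) = self.f1_memo {
            if dep.changed_at <= memo.verified_at {
                self.f1_memo = Some(Memo { verified_at: self.revision, ..memo });
                return memo.value;
            }
        }
        self.f1_runs += 1;
        let value = dep.value * 100;
        self.f1_memo = Some(Memo {
            value,
            changed_at: self.revision,
            verified_at: self.revision,
        });
        value
    }
}
```

Changing the input from 11 to 12 forces f2 to run again, but since its result is still 1, the memoized f1 is reused without running its body. Changing the input to 25 changes f2's result, so f1 is recomputed as well.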

If this sounds confusing to you, do not worry: it is confusing.

This illustration by @killercup might help:

Salsa Input Queries

All analyzer information is stored in a salsa database.

Analysis and AnalysisHost types are essentially newtype wrappers for RootDatabase -- a salsa database.

Salsa input queries are defined in SourceDatabase and SourceDatabaseExt (which are a part of RootDatabase).

They closely mirror the familiar Change structure: indeed, what apply_change does is it sets the values of input queries.

From text to semantic model

The bulk of the rust-analyzer is transforming input text into a semantic model of Rust code: a web of entities like modules, structs, functions, and traits.

An important fact to realize is that (unlike most other languages like C# or Java) there is not a one-to-one mapping between the source code and the semantic model.

A single function definition in the source code might result in several semantic functions: for example, the same source file might get included as a module in several crates or a single crate might be present in the compilation DAG several times, with different sets of cfgs enabled.